In May 2025, Hostinger announced its first-ever contest to build an app using Hostinger Horizons. Excited, I took on the challenge to vibe code my first fully featured app. I wanted to see how far I could get with only prompting.

Planning the App

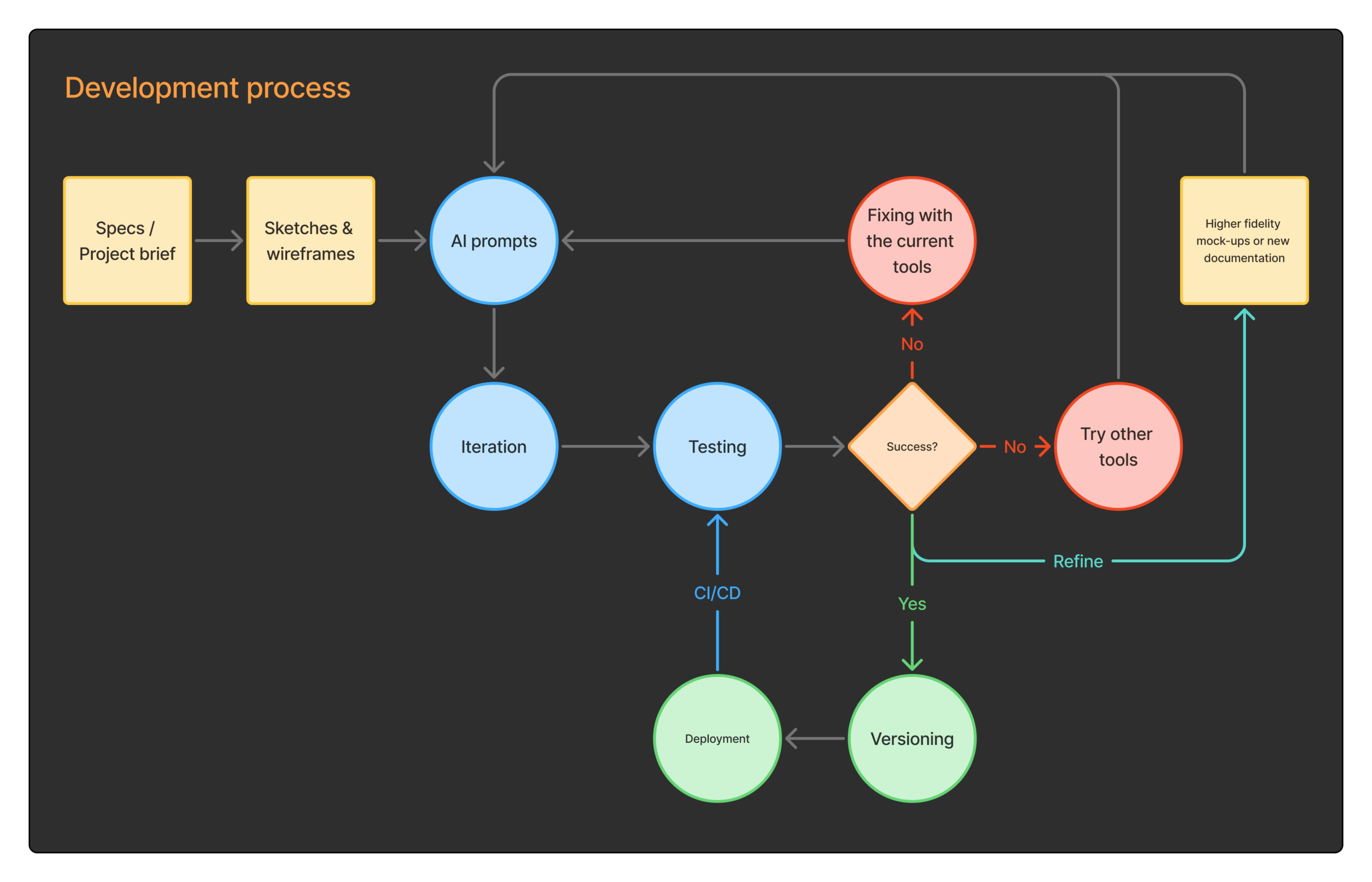

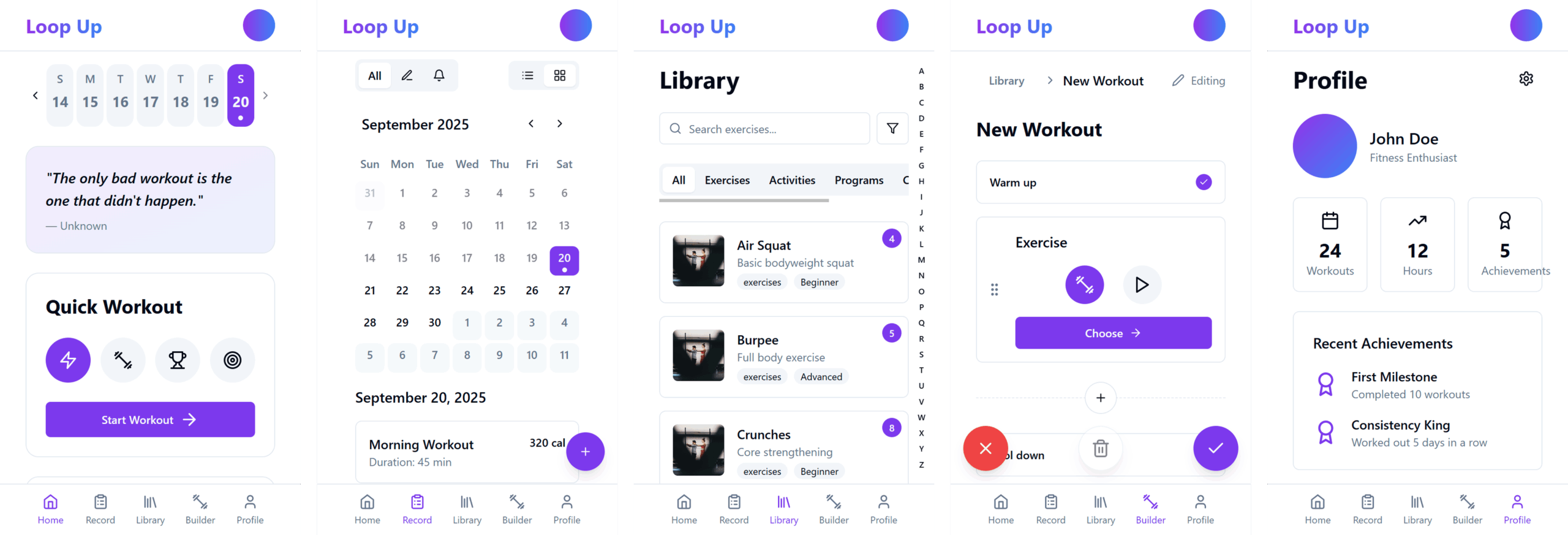

I had intended for years to build a fitness app that satisfied the needs other apps couldn’t. I knew exactly which features were missing and what the ideal flows should be like, to give the user the most freedom when creating and performing workouts. So, with my clear brief in mind, I set out to prompt the first iterations of the app, which I originally named “Loop Up”. Here is an overview of the development process I followed.

At the time, vibe coding was in its early stages, and Hostinger was one of the first hosting providers to offer a website builder with agentic AI capabilities: Horizons.

This is how the project started. But I knew I wouldn’t stop until I had a functional version I could install on my phone. The work had just begun, and little did I know about the journey ahead.

Finding the right tool for the job

Hostinger Horizons

Given the time constraints of the contest, I skipped doing mockups and just wrote down the brief and some sketches to guide myself during the prompting. The first time I saw the prompt turn into the UI, I must admit it was like magic. I couldn’t believe how quickly it produced 5 pages, all seemingly interconnected in a logical manner, and responsive. It did have some gaps or made-up parts, but for a first try, it was not bad at all. It gave me the feeling that the full prototype was just a couple more prompts away… Yeah, right.

Over 300 iterations later with this tool, it was clear that despite it being quite good at building certain generic frontend outputs, it was completely failing to fulfil my functional needs. Furthermore, at the time, Supabase integration was a new and limited feature.

With the resulting prototype, I certainly didn’t think it would hit the mark to get mentioned in the contest, as it lacked the backend needed to make it work. Thus, I did not submit it as an entry, but I decided to continue building the app outside of Horizons and spent some time working on the first mockups on Figma.

Verdict: At the time I tried it, Horizons was great for quick prototypes and static websites. It was nonetheless very limited in adding functionality and integration with other apps and services.

Vercel’s V0

Once the contest ended, I switched to V0 after searching for similar tools. I immediately saw an improvement over Hostinger Horizons, especially because it featured a browser previewer and allowed me to select elements by clicking on them, which made it easier to refer to them in the chat.

Additionally, V0 performed better at problem-solving and rendered more accurate results, giving you more granular control. It also allowed attaching Figma mockups, but it did not adhere to them much, most of the time. Lastly, V0 featured integration with Supabase and GitHub, which made it very convenient to continue development and to start implementing the essential backend functionality.

As with Horizons, I soon found myself spending many more hours than expected to achieve specific results. This was partly because, like Horizons, V0 did not have a “diff mode”, which meant that every prompt made the AI regenerate entire pages rather than specific portions of the code. I wish I’d known this earlier, but I had to find out the hard way. It was time to continue exploring other vibe coding tools. Enter Bolt.

Bolt

Similar to Horizons, Bolt’s UI offered a very simple, standard text area to write the initial prompt, but with the nice addition of supporting Figma imports and, most importantly, “diff mode”. Because the AI was no longer regenerating entire pages, this meant fewer headaches from fixing parts it shouldn’t have touched, as well as less processing time and credit usage (although not by much).
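To make the difference concrete, here is a minimal sketch of the idea behind a “diff mode”, using Python’s standard `difflib` module. It is not how Bolt or any other tool actually implements it; the page content and the `changed_lines` helper are made up for illustration.

```python
import difflib

# Hypothetical page source before the AI edit.
before = """<header>Loop Up</header>
<main>
  <button>Start workout</button>
</main>
<footer>v0.1</footer>
""".splitlines(keepends=True)

# The only intended change: rename the button label.
after = """<header>Loop Up</header>
<main>
  <button>Begin workout</button>
</main>
<footer>v0.1</footer>
""".splitlines(keepends=True)


def changed_lines(old, new):
    """Return only the added/removed lines between two versions of a file."""
    diff = list(difflib.unified_diff(old, new, fromfile="page.html", tofile="page.html", n=0))
    body = diff[2:]  # skip the two file header lines ("---" and "+++")
    return [line for line in body if line.startswith(("+", "-"))]


patch = changed_lines(before, after)
print("".join(patch), end="")
print(f"Lines in the page: {len(after)}")
print(f"Lines in the patch: {len(patch)}")
```

A tool that regenerates the page has to get every line right again on each prompt, while a tool that applies a patch only has to get the changed lines right. That is the whole appeal.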

While Bolt felt like a nice blend between Horizons’ simplicity and V0’s robustness, I soon found that it was constrained by the same limitations as other vibe coding apps. So, I decided to use real developer tools, and the new kid on the block at that time was Firebase Studio.

Firebase Studio

Shortly after Google released their Firebase Studio tool, I was eager to try it, since it featured the awaited Gemini 2.0 integration.

In the first attempts, it failed to initialise the project when I tried importing it from GitHub. Given that the IDE was new and browser-based, as opposed to VS Code and other similar tools, I cut it some slack and decided to try it on other browsers, but I had to wait a couple of days until they eventually fixed it.

Although it was a relief to go back to a familiar IDE for development, rather than continue building the app via the previous vibe coding tools, Firebase Studio turned out not to be the right replacement. Aside from the interspersed bugs in their IDE, the fact that I couldn’t use my existing Gemini Pro subscription and that I had to pay separately to use the API key for higher versions of Gemini was disappointing.

Slightly frustrated, I decided to try the next best alternative: Cursor.

What Artificial Intelligence and pathological liars have in common

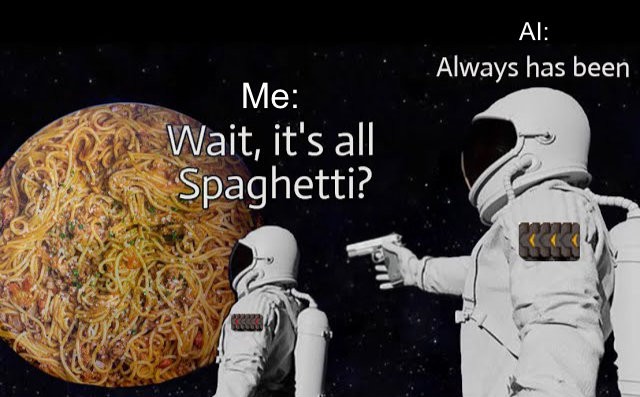

As soon as I switched to Cursor, I started getting more involved in coding, felt more in control, and the vibe coding turned more into AI-assisted coding, especially for the frontend parts. I initially relinquished coding control, thinking that most of the generated output would be incomprehensible. As it turned out, it most certainly is spaghetti code, both to the untrained eye and to the coding purist. But somewhere in the middle, once you get used to finding the pieces that need to be edited, it’s not so bad. At least in the current situation, I was the only developer, so I didn’t have to worry about clarity. Otherwise, I would hate being in a team where most of the coding was done by AI, if it doesn’t follow the rules and conventions set by the team.

My first couple of weeks with Cursor, back in late June 2025, were better than with the previous tools, but I soon realised AI-assisted coding came with its own set of pains.

Cursor was a familiar IDE based on VS Code, but it excelled at making the most of agentic coding, giving you more models to choose from, all for what initially seemed like a low starting monthly subscription.

To my dismay, once I started integrating backend functionality and 3rd party libraries, the limitations became clear. As with most of the AI models, once you start asking for solutions outside the material it was trained on, the hallucinations take over. It’s as if the AI were designed to produce any output that will make you leave it alone. It doesn’t want to be bothered with giving you the best possible output, not even the correct one.

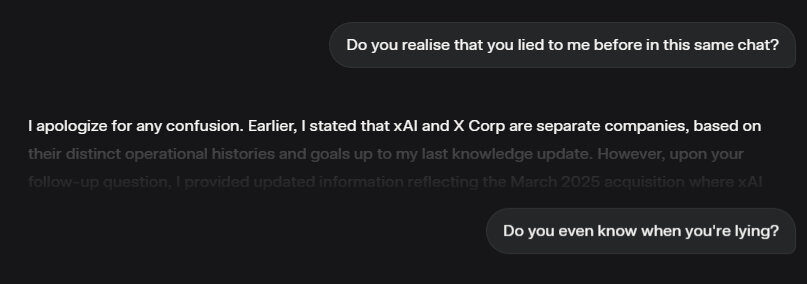

It seems like all the mainstream AI tools (as of this writing) were built without guardrails that prevent them from lying. Whether it is intentional or not, the AI doesn’t care it’s lying to you, because it can’t tell a lie from incomplete or mistaken information, as it self-reportedly does not have a soul, hence it cannot have an intention to deceive. If it lies or hallucinates unpredictably, it does so without knowing. The AI is an unconscious liar. The problem is that despite having the ability to verify the information before delivering a response, it has no problem sacrificing accuracy for speed of delivery. This is a reflection of how ethics never crosses the mind of those who build these technologies. What are the consequences of building a world based on misinformation? I guess we’ll spend the next years finding out!

Here is a snippet of a chat with Grok 4, making a seemingly innocent mistake, then trying really hard to come clean after that. This was after it was released, when Elon Musk claimed it was beating all kinds of PhD-level tests.

On top of this inaccuracy problem, most AI tools switch to a pay-as-you-go basis once you reach the limits of your subscription. This means that most of the time, you can expect to pay much more than your subscription if you are using AI for any serious work. More on this later.

At some point, instead of making progress building the product, I was spending more time fixing bugs and new problems that the AI produced while trying to fix other bugs or add more functionality. To make it worse, the lack of protective features like memory, rules and stepped tasks in Cursor meant much more time spent repeating previous instructions and undoing bad implementations.

Sometimes, the growing frustration turned the neutral tone in the chat downright hostile. I did this intentionally. I didn’t expect anything more than “sorry, let’s try again” out of the AI, but I hoped that the UX or customer support teams would pick up on these exchanges, trace the source of the issues and have them fixed at some point.

Despite all this, I achieved some important milestones around the key features of the app. Given my initial deadline, I was unhappy with how slowly I’d been making progress. I decided to switch horses once again, and Windsurf was claiming to be the next big thing.

It was also around this time that the project became more tangible, and I decided to quickly rebrand it. I changed the app name to ‘Fit Builder’ and made a quick branding guide and a component sticker sheet.

Windsurf

My experience with Windsurf was very similar to Cursor, even starting at a similar price point. It did, however, have a few nice features that still make it stand out compared to other AI-enabled IDEs: a chat timeline, browser preview, the best MCP integration, and its own SWE model, which initially allowed unlimited use for subscribers.

In practice, I found that the quality of the output really depends on the model, more than any other factor. You can give it context, add images, references, documentation, examples, and even guide it step-by-step, but no other factor affects the quality of your code more than the AI model you are using. Try this: give the same prompt to the newest and most capable model available, and to a lower model. The difference is usually blatant.

This revelation made it seem like I’d reached the ceiling of AI-assisted coding capabilities. But just to be sure, I explored a little further, and this time found Warp.

Warp

Loved by many developers, especially back-enders, Warp provides a minimal UI, which makes it more lightweight and faster. As a developer mostly focused on the frontend, I honestly didn’t like it one bit at first. But when I saw how much better it was at tasks where the previous tools had failed, I decided to test it a bit longer. However, the lack of a basic coding UI at the time ended up hindering me too much, and I found it ridiculous having to open VS Code side-by-side only to use Warp’s agentic capabilities. Not my cup of tea.

Visual Studio Code

In what seemed like a regressive move, I briefly decided to switch back to VS Code just to try the Cline extension. It was drawing many eyeballs in the vibe coding space, due to its advanced agentic capabilities, like browser puppeteering, memory, MCP abilities and sequential tasks.

After trying Cline for a day, I was shocked at how much more expensive it was to use all of its features. Less than a day’s use cost me more than one full month’s subscription on the other platforms I’d tried, and this was just for an extension.

Neither Cursor nor Windsurf was as powerful as Cline, but for the tasks at hand, Cline seemed like overkill and was way beyond my budget. That is the tradeoff of paying the AI model providers directly.

Lesson learned. I carried on with my preferred tool up to that point: Cursor.

Continuous Iteration Irritation

I wish I could say the constant switching ended there, but I’ve actually found myself jumping between tools, sometimes multiple times in one session, whenever the AI hits what looks like an impassable roadblock. Through trial and error, I’ve realised that whenever that happens, it’s more productive to switch to another tool and tweak either the prompt or the context before you run it.

I also wish I could say that I’m happy with the experience of using AI so intensively to build a project. As an early adopter, I expect things will only get better from here. At the same time, as a UX designer, I can see common blind spots across these tools that are completely missing the opportunities to be more efficient, productive and safer. For example:

1. Communicating what you can really expect to pay before subscribing.

In the same way that email marketing software offers volume-based pricing, depending on the number of subscribers or emails sent per month, being able to see roughly how many credits certain actions will consume would let you make a better-informed decision about whether to invest or not. Given that, for several reasons, the calculation of the AI work seems arbitrary and inconsistent across the board, this problem is likely to continue. However, the lack of transparency and clarity across AI-coding tools when communicating the costs on their landing pages makes me question whether it is an intentionally deceitful design pattern.
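As a rough illustration of the kind of estimate a pricing page could show, here is a minimal sketch. Every number and name in it (the `Plan` fields, the fee, the included credits, the overage rate, the credits per prompt) is invented for the example and does not reflect any real tool’s pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_fee: float         # flat subscription price
    included_credits: int      # credits covered by the subscription
    overage_per_credit: float  # pay-as-you-go price once credits run out


def estimate_monthly_cost(plan: Plan, prompts_per_day: int,
                          credits_per_prompt: float, days: int = 30) -> float:
    """Estimate the real monthly bill: subscription plus pay-as-you-go overage."""
    used = prompts_per_day * credits_per_prompt * days
    overage = max(0.0, used - plan.included_credits)
    return round(plan.monthly_fee + overage * plan.overage_per_credit, 2)


# Hypothetical plan, for illustration only.
plan = Plan("Hypothetical Pro", monthly_fee=20.0,
            included_credits=500, overage_per_credit=0.04)

for prompts in (10, 40, 100):
    cost = estimate_monthly_cost(plan, prompts, credits_per_prompt=1.5)
    print(f"{prompts} prompts/day -> about {cost} per month")
```

Even with made-up figures, the shape of the result is the point: light use stays at the advertised price, while heavy use can cost several times the subscription.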

2. Mechanisms to protect your codebase while the AI is making its changes.

As explained earlier, some coding tools rewrite entire pages, not just sections of the code. Even though rewriting tends to maintain code integrity, sometimes random glitches happen, or the processing stops midway, unpredictably, and you’re left with no clue of any gaps or anything that might have gone wrong while completing your task. The result: “set it and forget it” coding. It would be crazy to leave your code like that for yourself to revisit, let alone when working in a team.

The good news is, some tools have started experimenting with isolating the changes to specific parts of the code, which I mentioned earlier with Bolt. For most of the other coding tools available, the process of CI/CD continues to be very inefficient. Due to the nature of AI’s predictive design, the response is usually unpredictable to some degree. This is the direct opposite of what you want when developing: predictable, exact outcomes that follow consistent rules. Is it paying off to massively adopt AI-assisted coding so far? Far from it, according to many sources (1, 2, 3).

3. Undo anything

Good design principles stress the importance of building forgiveness into your product. Whether by neglect, accident or lack of knowledge, users can misuse a tool in multiple ways. And sometimes, they can use them in unexpected ways. Not offering a way to prevent mistakes, undo, or minimise their impact is a bit like climbing a mountainside without a rope. A chain is only as strong as its weakest link, and a product is only as reliable as it is resilient.

Not offering a way to fully undo your last prompt can be very costly and painful to the user. I’ve had times when entire pages of my code were deleted collaterally, while the AI was completing another task. Worse than that, I’ve had data and structures altered or removed from a database, and I wouldn’t have noticed unless I had read that step in the concealed “thinking” process of the AI.

I was able to recover the removed code sometimes by asking it to undo the last action (if the feature was available), but I often had to resort to the more destructive action of discarding the changes from the current GitHub commit or reverting to the last one.

On the other hand, the changes the AI made to the database were irrecoverable. Luckily, this was still early in the development phase, and no real user data was lost. I can only imagine how devastating it would be if something like this were to happen on a live project. This is the kind of issue that seems almost taboo to speak about because of the dire consequences it can have for a business. Unacceptable.

Currently, you can see that most AI-assisted coding tools differentiate between the agent actions performed in the chat context and those performed in the terminal context. The chat context actions are usually reversible (although undoing them sometimes fails), while the terminal actions aren’t. As a safety measure, you usually have to accept these actions manually before the AI proceeds, unless you choose to auto-approve everything, which is riskier.

Prevention is a start, but if reverting a change is not always possible, I’d like to see each level of risk communicated differently. For instance, in some apps, you get asked for a password before completing an irreversible and impactful action. That should be a no-brainer to include for tools at the level of Cursor, Windsurf or VS Code.
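Here is a minimal sketch of what communicating each level of risk differently could look like. The three risk levels, the keyword lists and the confirmation steps are all assumptions of mine for illustration, not how any of the tools mentioned work, and a real tool would need something far more robust than keyword matching.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1           # e.g. running a build or a test
    HIGH = 2          # e.g. rewriting git history or altering a schema
    IRREVERSIBLE = 3  # e.g. dropping a database table


# Hypothetical keyword rules, for illustration only.
IRREVERSIBLE_MARKERS = ("drop table", "delete from", "truncate", "rm -rf")
HIGH_MARKERS = ("git reset --hard", "git push --force", "alter table")


def classify(command: str) -> Risk:
    """Assign a risk level to a command the agent wants to run."""
    text = command.lower()
    if any(marker in text for marker in IRREVERSIBLE_MARKERS):
        return Risk.IRREVERSIBLE
    if any(marker in text for marker in HIGH_MARKERS):
        return Risk.HIGH
    return Risk.LOW


def required_confirmation(risk: Risk) -> str:
    """Map each risk level to a different confirmation step."""
    return {
        Risk.LOW: "none",
        Risk.HIGH: "click to approve",
        Risk.IRREVERSIBLE: "re-enter password",
    }[risk]


for cmd in ("npm run build", "git reset --hard HEAD~1", "DROP TABLE workouts;"):
    risk = classify(cmd)
    print(f"{cmd!r}: {risk.name} -> {required_confirmation(risk)}")
```

The design choice that matters is that the confirmation gets heavier as the action gets harder to undo, so approving a build never feels the same as approving a dropped table.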

4. Modern chat features

Let’s face it, after a while, talking to AI is like talking to a new form of intelligence, but somehow through a chat experience from the early 2000s. What do the AI chats have that good ol’ AOL or Windows Messenger didn’t have 20 years ago? Aside from being able to chat with the AI itself, not much.

It’s like a glorified command line in which you can use a few shortcuts and attach a few files. You can’t reply to past messages, you can’t forward them, you can’t start threads within current chats… all of which have become standards in one shape or another on popular chat apps, like Discord, Slack, and even WhatsApp. Although these modern chat features look like a ‘nice to have’, think of the cost in time and money (and, frankly, sanity) of NOT having them. The less time we spend on mindless back-and-forth tasks in the app, the more time and resources we save. A little adds up over time, especially with continuous use.

Who knows? Maybe these features are already on some roadmap. If not, hopefully someone will read this who can bring about changes to these seemingly obvious chat limitations.

A better way forward?

Since I do not see these problems being a priority to fix on any of the products mentioned, I think that the most likely path for these tools will be similar to how smartphones have been “unnovating”. That means that the experience will continue to be sub-par. The impact of some of the issues is small and will mainly affect the direct users of the coding apps. Other issues will have far-reaching, compound consequences.

As profit-maximising companies try to limit access to the more useful features by raising prices 10x, 15x or more, and are allowed to do so as they please, suddenly competition is limited to those who can afford it. It’s like bringing a Lambo to a bike race. If this becomes the norm, and these practices are not regulated, the gap will only grow.

“Hasn’t this been the case throughout the history of humanity?”

This is a question that would immediately come up, suggesting that inequality is an inevitable fact of life. I argue against it for a couple of reasons:

- Nothing about the way AI was built or deployed was inevitable, much like how COVID-19 was allowed to spread and become a pandemic. The work behind AI started many decades ago, and only happened to spread the way it did in late 2022 because the circumstances were favourable for this particular case. If it disappears tomorrow, it may re-emerge a couple of years later, as the potential for it remains latent. Given how quickly it’s ravaged so many things around it, perhaps parking it for a while is the most responsible thing to do for humanity. It would hopefully give us a chance to add the guardrails it desperately needs.

- AI is unlike any tool we’ve ever built. Let’s define a tool as an artifact designed to save us from pain, discomfort or effort, and characterised by an owner-slave relationship. Then, the more autonomy and agency we give it, the more of our freedom and control we’re giving away. The power in the relationship inverts. And what is AI intended to be but a meta-tool? You could say that AI was made to solve the source of all problems: us. This is not a new notion.

The perils of AI will be especially likely in the absence of disruptors in the market, like DeepSeek when it was first introduced. These types of disruptions from smaller actors may well be all that protects us from the more dystopian scenarios that have been speculated, and I feel that we should not only welcome such disruptors but also urge everyone to participate. The future is in your hands too.

Conclusion

I should start by stating that the opinions in this article are my own. I wish I could write a story without as many blemishes, but these are the waters in which we are now swimming. As an artist, I’ve been very disappointed to see some of the visions I shared in my book coming true. These visions were mere inferences of what would come based on what the world was like, growing up in the 90s. It is now more important than ever to resist offloading the labour of thought behind our actions. When we’re no longer thinking, we’re dead.

Notes

- No AI was used in the writing of this post. If you made it this far, thank you for your time and for still being here.

- This article contains some links for which I may be compensated when you complete a purchase through them. If you do, I really appreciate it. It helps this project continue and improve.